Never before in human history has such potential existed for the large-scale digital analysis of text. Thanks to Google's search engine index of the World Wide Web and the emerging Google Books project, an enormous amount of text now exists in indexed digital form, and this base is growing constantly. But the mechanisms by which digital texts and their indices are currently used to judge the relative quality, value, or meaning of a text are crude compared to how humans perceive and relate to text, and especially to literature, which is text as art. This raises a chain reaction of questions. Are there gains to be made in the fields of literature or linguistics by exploiting this digital base of text? Is it possible to derive aesthetic principles logical enough to work as algorithms for the analysis of digital text? Would analysis based on such principles yield new insights into our relationship to language and literature? Could such analysis contribute to something like a greater computational “understanding” of text, with implications for improving search engine results, speech recognition, and machine translation? These questions fascinate me.

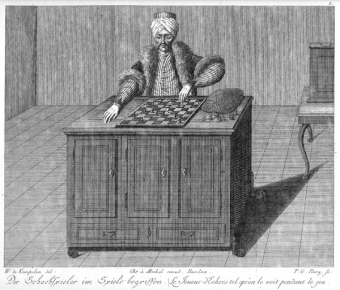

My first major programming project at UC Berkeley was a computerized haiku generator, part serious inquiry and part farce. Using Mad Libs-style templates to slot verbs, nouns, and modifiers into plausible orders with the correct syllable count for the haiku form, this little program could generate thousands of “haiku” in minutes. But the results were eccentric at best. It was also during this time that IBM’s Deep Blue chess computer beat the world chess champion, Garry Kasparov (one of my heroes), adding a certain sting to my appreciation of artificial intelligence. Finally, while at UC Berkeley I had the pleasure of studying with Stephen Booth, who articulated a theory of aesthetics at once elegant and profound, which he called “precious nonsense.” It was one of the first theories of literary criticism I encountered that seemed to respect the art rather than appropriate it in service to the critic.

Certainly, quantifying a theory as subtle as “precious nonsense” is much more difficult than teaching a computer to count syllables and slot words from presorted lists into prearranged patterns. Still, I think there are gains to be made in examining the aesthetic elements of text, which remain largely unaddressed in most computerized systems for textual analysis. The music of English, for example, follows a number of well-defined rules. What would a “musicality index” tell us about a passage of text? How might semantic concordance inform our understanding of the poetic elements of a text?

While organizations like the Association for Computational Linguistics are pursuing various ways to advance our ability to understand language, interest in such tools currently seems to come mainly from linguists, psychologists, and speech therapists. What about literary critics? Most literary-minded people with whom I have discussed this seem to have a knee-jerk reaction against the idea of quantified aesthetics, as though such analysis might somehow demean the art. Me, I think art can take it. And as I look back on seemingly disparate past interests and experiences, I see how this new line of inquiry weaves them together. Who knows, ultimately, where this might lead? For now, I am enjoying wondering about these questions.